GPU Parallel Programming

GPU computing is one approach that could reduce the time it takes to process this data. We have several areas of interest:

- Which statistical and machine learning methods can be used with GPUs

- Evaluation and performance metrics for using R with GPUs

- Which epidemiological and health services research problems are best suited to GPUs

- How best to develop code that uses the GPU's processors and their associated memory
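For the evaluation and performance metrics item, one common starting point is a speedup ratio: the wall-clock time of a CPU baseline divided by the time of the accelerated run. The sketch below is a minimal illustration in Python using only the standard library. The names `time_call`, `speedup`, and `pearson` are hypothetical helpers, not functions from any package listed on this page, and no GPU is involved. The plain-Python correlation only stands in for a CPU baseline, and a GPU timing would have to be measured separately on real hardware.

```python
import statistics
import time


def time_call(fn, *args, repeats=5):
    """Return the median wall-clock seconds over `repeats` calls of fn(*args)."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def speedup(baseline_seconds, accelerated_seconds):
    """Speedup factor: how many times faster the accelerated run is."""
    if accelerated_seconds <= 0:
        raise ValueError("accelerated_seconds must be positive")
    return baseline_seconds / accelerated_seconds


def pearson(xs, ys):
    """Plain-Python Pearson correlation, standing in for a CPU baseline."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


if __name__ == "__main__":
    # Synthetic data, used only to give the baseline something to do.
    xs = [float(i) for i in range(100_000)]
    ys = [2.0 * x + 1.0 for x in xs]
    cpu_seconds = time_call(pearson, xs, ys)
    print(f"CPU baseline median: {cpu_seconds:.4f} s")
    # A measured GPU time would replace the made-up second argument here.
    print(f"Speedup if a GPU run took half as long: {speedup(cpu_seconds, cpu_seconds / 2):.1f}x")
```

Taking the median over several repeats reduces the influence of one-off delays such as warm-up or other processes on the machine. For a fair GPU comparison, the accelerated timing should include the cost of moving data to and from the card.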

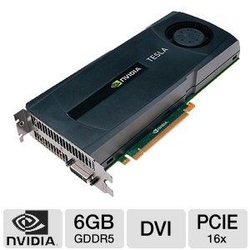

Nvidia Tesla (NvidiaEpi01)

We configured a computer to host two Tesla C2075 cards and chose an Nvidia-branded case (pictured). The hardware configuration is as follows:

- NvidiaEpi01 (Intel i7 2600K 3.4 GHz, 16 GB memory, 1 TB, 2x Tesla C2075 (6 GB + 448 CUDA cores))

Two other computers have been built since.

- NvidiaEpi02 (AMD 8-core, 32 GB memory, 256 GB SSD + 3 TB, GTX 260 (896 MB + 192 CUDA cores))

- NvidiaEpi03 (AMD 8-core, 32 GB memory, 256 GB SSD + 3 TB, Tesla C1060 (4 GB + 240 CUDA cores) + Nvidia GeForce 640 (4 GB + 384 cores))

Because we were working with R, we needed to set up the computer with Ubuntu Linux, as one of the R packages we wanted to use (GPUTools) was supported only on Linux.

Nvidia Tesla C2075 cards

- 448 CUDA cores

- 6 GB memory

The C2075 is a Fermi-architecture card with 6 GB of memory and 14 multiprocessors of 32 cores each (14 × 32 = 448 CUDA cores). It connects to the host over PCIe and has an on-board memory bandwidth of 144 GB/s.

R Packages that work with Nvidia Tesla Cards

- GPUTools (http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2796814/pdf/btp608.pdf)

- CudaBayesReg (http://journal.r-project.org/archive/2010-2/RJournal_2010-2_Ferreira~da~Silva.pdf and http://www.jstatsoft.org/v44/i04/paper)

- CUtil (http://web.warwick.ac.uk/statsdept/useR-2011/abstracts/290311-user2011_abstract_rev3.pdf)

- ROpenCL (http://repos.openanalytics.eu/html/ROpenCL.html)

Other References:

- http://web.warwick.ac.uk/statsdept/user2011/TalkSlides/Contributed/16Aug_1115_FocusI_3-HighPerfComp_1-Ligtenberg.pdf

- High Performance Data Mining Using R on Heterogeneous Platforms (http://users.eecs.northwestern.edu/~choudhar/Publications/HighPerformanceDataMiningUsingRHeterogeneousPlatforms.pdf)

- A Short Note on Gaussian Process Modeling for Large Datasets using Graphics Processing Units (http://arxiv.org/pdf/1203.1269v2.pdf)

- OBANSoft: integrated software for Bayesian statistics and high performance computing with R (http://web.warwick.ac.uk/statsdept/useR-2011/abstracts/300311-user2011_obansoft.pdf)

- Data Analysis using the R Project for Statistical Computing (https://www.nersc.gov/assets/DataAnalytics/2011/TutorialR2011.pdf)

- Writing Efficient and Parallel Code in R (http://www.statistik.tu-dortmund.de/~ligges/useR2012/Writing_efficient_and_parallel_code_in_R.pdf)

| R Function | Package | Package Function | Description | System |
|---|---|---|---|---|
| hclust | GPUTools | gpuHclust | | Linux |
| svm | GPUTools | gpuSvmTrain | Support Vector Machine of package e1071 | Linux |
| cor | GPUTools | gpuCor | | Linux |
| granger.test | GPUTools | gpuGranger | granger.test of package MSBVAR | Linux |
| | GPUTools | gpuMi | | Linux |
| rhierLinearModel | CudaBayesReg | rhierLinearModel | Bayesm package | Linux |
| | ROpenCL | | | Linux |

GPU R Tutorials

Note: Linux only

Installing GPU Packages in R

http://www.r-tutor.com/gpu-computing/rpud-installation

Hierarchical Cluster Analysis

http://www.r-tutor.com/gpu-computing/clustering/hierarchical-cluster-analysis

SVM with R and GPU

http://www.r-tutor.com/gpu-computing/svm/rpusvm-1

GPU Databases

Other GPU Machine Learning (non-R)

GPUMLib is an open source Graphics Processing Unit Machine Learning Library.

http://gpumlib.sourceforge.net/

Version 0.1.9 includes:

- Back-Propagation (BP)

- Multiple Back-Propagation (MBP)

- Non-Negative Matrix Factorization (NMF)

- Radial Basis Function Networks (RBF)

- Autonomous Training System (ATS) for creating BP and MBP networks

- Neural Selective Input Model (NSIM) for BP and MBP (NSIM allows neural networks to handle missing values directly)

- Restricted Boltzmann Machines (RBM)

- Deep Belief Networks (DBN)

Python Random Forests on GPU (CudaTree)

http://blog.explainmydata.com/2013/10/training-random-forests-in-python-using.html

Anaconda Accelerate

Fast Python for GPUs and multi-core with NumbaPro and MKL Optimizations

https://store.continuum.io/cshop/accelerate/

Neural Networks on GPU

- http://www.neuroinformatics2011.org/abstracts/speeding-25-fold-neural-network-simulations-with-gpu-processing

- http://arstechnica.com/science/2011/07/running-high-performance-neural-networks-on-a-gamer-gpu